I personally learned about Bitcoin a long time ago: I mined it back when you could still do that on an ordinary computer. When Ethereum appeared, it brought a big idea: blockchain technology could be used not only to reinvent money and the financial system, but to look at things more broadly. Ethereum added a virtual machine on top of the blockchain, and people started thinking about a much wider variety of applications.

By web3 standards, the company itself is fairly old. We started working on it back in 2017. At that time my co-founder Dmitry and I were watching blockchain from the sidelines. We were drawn to the idea of reinventing internet infrastructure with blockchain, to address the problems of centralization, ownership, and control over infrastructure, applications, and services.

The internet has DNS, but it is controlled by an American organization. It was created as a neutral party, but it still makes a profit, and specific people inside it make the decisions.

There is cloud infrastructure, which hosts a substantial part of the internet and all kinds of services, and it comes down to just three providers: Amazon, Google, and Microsoft. When giants like these control the infrastructure, there are many points of failure where something can go wrong. It can be a technical problem, such as an admin or DevOps engineer shutting down a cluster or a data center. Or a government says: "You are on our territory, so ban this customer." In effect, a single employee of the company can do something harmful to its customers, and there are millions of them.

So centralized control is not a good thing. We started thinking about how to design a blockchain-based infrastructure that would be resilient to the vulnerabilities of centralization.

The project landscape at the time looked like this: there was Ethereum, along with a handful of its first competitors. As for analogues of cloud computing, there were projects like Storj, DFINITY, and Golem. That was about it. We started digging into databases and encrypted data. We researched different directions and spent a fair amount of time figuring out what was worth building. In the end we arrived at what is now Fluence.

Today Fluence is a decentralized computing platform and a marketplace for compute resources. This means that any owner of a Raspberry Pi or a professional data center can join the network, offer their resources, and earn a little money. On top of these resources sits a developer stack. Our main customer is a developer who uses Fluence the way they would use a cloud. Clouds offer serverless: databases and cloud functions that let you abstract away from the hardware the code runs on and not think about servers, which scale automatically.

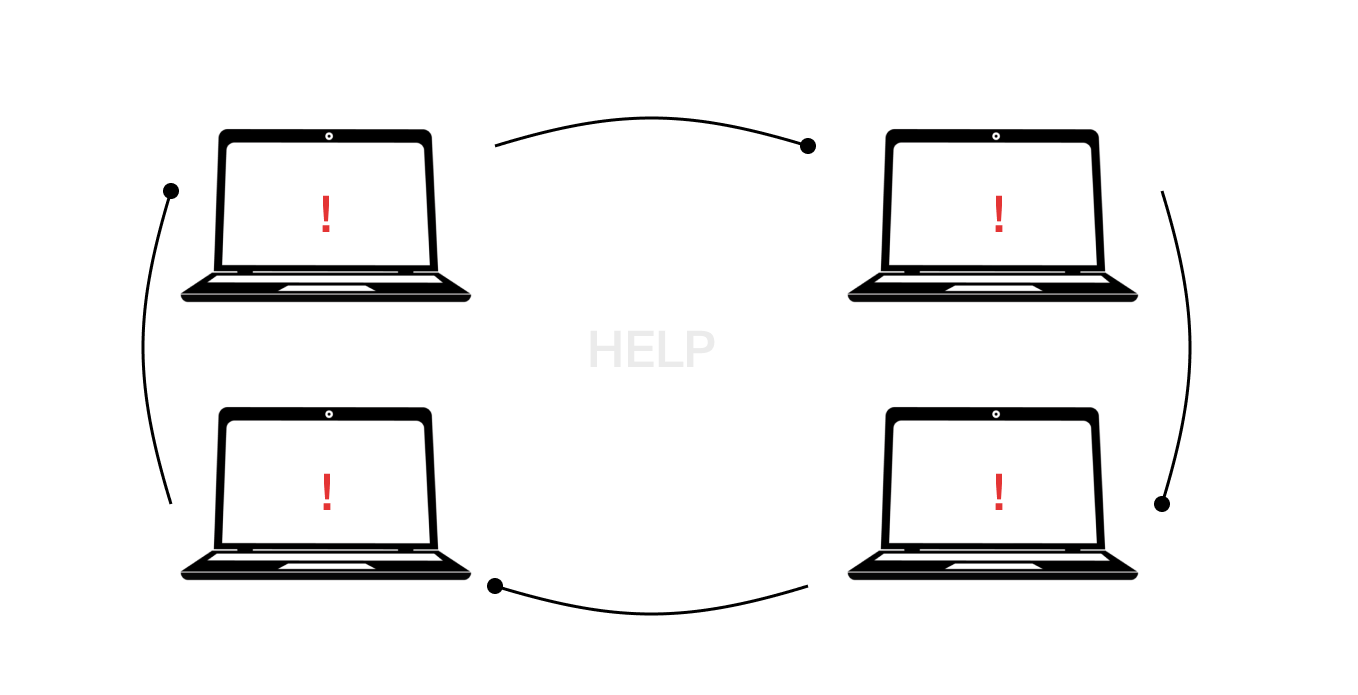

Fluence is trying to offer developers a decentralized analogue of that traditional service. To start using Fluence, you just write the functions that implement your application's backend and deploy them to our network. Developers can deploy to one provider or to several. Beyond that, Fluence offers a few built-in features, such as fault tolerance.

By default, everything you deploy goes to several providers at once using a coordination algorithm. Fluence provides tools to configure and program fault tolerance, scalability, and everything else a backend needs.
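To make the idea of deploying to several providers concrete, here is a minimal sketch of client-side failover in Python. It is not Fluence's coordination algorithm or API: the function names (`call_with_failover`, `offline_provider`, `healthy_provider`) are hypothetical, and each "provider" is just a local callable standing in for a remote replica of the same function.

```python
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when every replica of the function failed."""


def call_with_failover(replicas: Sequence[Callable[[str], str]], payload: str) -> str:
    """Try each replica in order and return the first successful result."""
    errors = []
    for replica in replicas:
        try:
            return replica(payload)
        except Exception as exc:  # a real client would catch narrower errors
            errors.append(exc)
    raise AllProvidersFailed(f"{len(errors)} replicas failed")


# Hypothetical providers: one is down, one works.
def offline_provider(payload: str) -> str:
    raise ConnectionError("provider is offline")


def healthy_provider(payload: str) -> str:
    return payload.upper()


result = call_with_failover([offline_provider, healthy_provider], "hello")
print(result)
```

The caller never learns that the first provider was offline, which is the property a replicated deployment is meant to give. A real system would also need timeouts and a policy for choosing the order of replicas.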

All of this can be described in our programming language, Aqua. It was designed specifically for building secure distributed systems.

I can program how my backend, and the various instances or functions deployed on different providers, behave together to get exactly what I need. Some of this will simply be built into Fluence by default. Right now, for example, there is fault tolerance but no autoscaling feature. And you will be able to pay for all of this with stablecoins on the blockchain, or with any other ERC tokens.

Another important thing we are building is proofs of computation. They remove the need to build a provider reputation system. In effect, we are creating a stricter model in which you can rely on code being executed correctly on Fluence. A blockchain guarantees this too, through consensus, but there hundreds or thousands of nodes run the same computation to achieve security. Fluence has no global consensus, but it does have proofs of correct code execution. When you deploy a function to several providers you can optionally add consensus, but in practice that makes execution more expensive.
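The trade-off in the last sentence can be illustrated with a small Python sketch. It assumes the simplest possible form of optional consensus, a majority vote over the results of several replicas; this is an illustration only, not the mechanism Fluence uses, and all names (`call_with_quorum`, `honest`, `faulty`) are hypothetical.

```python
from collections import Counter
from typing import Callable, Sequence, Tuple


def call_with_quorum(
    replicas: Sequence[Callable[[int], int]], payload: int, quorum: int
) -> Tuple[int, int]:
    """Run the function on every replica and accept the most common result.

    Returns the agreed value and the number of executions that were paid for.
    """
    results = [replica(payload) for replica in replicas]
    value, votes = Counter(results).most_common(1)[0]
    if votes < quorum:
        raise RuntimeError(f"no quorum: best result has {votes} of {quorum} votes")
    return value, len(results)


# Hypothetical replicas of the same function; one returns a wrong answer.
def honest(x: int) -> int:
    return x * 2


def faulty(x: int) -> int:
    return x * 2 + 1


value, executions = call_with_quorum([honest, faulty, honest], 21, quorum=2)
print(value, executions)
```

The vote masks the faulty replica, but the same work was executed three times. That multiplication is why consensus is left optional: the cost grows with the number of replicas that take part.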

Fluence is currently in the private testnet stage. We have developer tooling and payments, but there are no compute providers on the marketplace yet. If you want to use Fluence today, you will have to work with nodes that we run ourselves. Free of charge, of course. Next we plan to move to a public testnet and onboard compute providers, and then to mainnet with a live economy and payments.

Take Filecoin, a decentralized marketplace of storage providers. It has a client interface for uploading files. It has an incentive mechanism under which providers commit to storing files for a certain period. If they fail to do so, they lose their stake. If they do store the files, they demonstrate it with proofs. That is very similar to what we do.

We also have a marketplace of providers, just with different hardware. And we likewise have a proof system. From a developer's point of view it is simply a somewhat different product at a different level.

There is also Akash Network, for example. Their approach is simpler. They are building a marketplace too, but it essentially just matches a client with some provider. There is no guarantee that the provider will not, for example, go offline.

On distributed computing and storage

I think decentralized distributed computing is harder, because the use cases, formats, packaging, and tasks vary so widely.

A database is also more about computing than about storage. It is both storage and compute. But the industry started thinking about storage first. IPFS, for example, began around 2015, when its first white paper came out. People started thinking about compute later, and more or less usable products have only begun to appear now. Not all of them run on-chain, but they offer a decent product that developers can use.

Infrastructure in general is quite hard to build. There are many problems you have to bring down to a simple level. It is not enough to say "we built a marketplace, now deploy whatever you want"; you have to get to a usable product. That means the customer has a guarantee that the code they want to run will be run, and the provider running it has a guarantee that the code will not escape its container and break their operating system.

We had to invent a lot of low-level things. In particular, we built Aqua, which is both a distributed execution protocol and a domain-specific language. You can use it to write scripts that describe distributed computation in terms of nodes and the functions to be executed on them, along with conditions, operators, loops, and so on. Aqua is something like an analogue of AWS Step Functions that can run securely in a public P2P network and offers more language features.

Every Fluence node has a virtual machine that executes Aqua. All nodes serve it: each one handles incoming requests through Aqua. Each request is a data packet containing a script that describes exactly how the request is executed across different functions on different peers. This gives us infrastructure that can run distributed algorithms without redeploying them to all or some of the nodes each time, because the algorithms are programmed into the requests themselves at the point where they originate.

The simplest example of what you can build is a P2P chat between two devices that use the Fluence network in the middle. The Fluence client on one device creates a data request that says: "Go to such-and-such node, execute such-and-such function, and send the result to that other device." This is how we pass a message from one device to another through a Fluence node. From there, things get much more complicated.
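The chat example can be modeled in a few lines of Python. This is a toy simulation under stated assumptions, not Aqua syntax and not the Fluence client API: the `Request`, `Peer`, and `Network` classes, the peer ids, and the `tag` and `receive` functions are all hypothetical. What it preserves from the description is the key idea that the request carries its own script, a list of (peer, function) steps, so nothing has to be redeployed to the nodes to change the route.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# One step of the script: (peer id, function name). Hypothetical format.
Step = Tuple[str, str]


@dataclass
class Request:
    script: List[Step]  # where to go and what to call, in order
    data: str           # the payload passed from step to step


@dataclass
class Peer:
    peer_id: str
    functions: Dict[str, Callable[[str], str]]
    inbox: List[str] = field(default_factory=list)


class Network:
    def __init__(self, peers: List[Peer]) -> None:
        self.peers = {peer.peer_id: peer for peer in peers}

    def run(self, request: Request) -> str:
        """Execute the script hop by hop, feeding each result to the next step."""
        data = request.data
        for peer_id, fn_name in request.script:
            data = self.peers[peer_id].functions[fn_name](data)
        return data


def make_device(peer_id: str) -> Peer:
    """A chat device whose only function stores incoming messages."""
    device = Peer(peer_id, {})

    def receive(message: str) -> str:
        device.inbox.append(message)
        return message

    device.functions["receive"] = receive
    return device


relay = Peer("relay-node", {"tag": lambda msg: f"[via relay-node] {msg}"})
device_b = make_device("device-b")
network = Network([relay, device_b])

# Device A builds the request: call `tag` on the relay, then deliver to device B.
request = Request(
    script=[("relay-node", "tag"), ("device-b", "receive")],
    data="hi from device A",
)
network.run(request)
print(device_b.inbox)
```

Changing the route, for example adding a second relay, only means changing the `script` list inside the request. In a real network each hop would also have to verify the request and its sender, which this sketch leaves out.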

Our main difficulty lies in inventing low-level infrastructure without the cloud. We do not use cloud tooling, because it was built for trusted infrastructure where someone is in charge of the whole setup. We needed a system that can exist without a central admin.

On Multicoin Capital's contribution to distributed infrastructure

They believe in distributed infrastructure and are in a position to invest in the most solid projects in the field. When we announced our round, Ceramic announced theirs too. Ceramic is a distributed database. The idea had been in the air for many years, but Ceramic is one of the teams that approached the problem the right way, and many people like their solution. That is why Multicoin invested in them.

Multicoin's portfolio includes RenderToken, a project for rendering video and other content on GPUs. Ethereum has moved to Proof-of-Stake, and a lot of GPUs seem to be freeing up because mining returns on other chains are much lower. Their owners are looking for a new use for them. Some projects are trying to reach the providers of these GPUs so the hardware can render video or run machine learning.

Multicoin has also invested in a project building a distributed CDN. That is another idea that has been floating around for many years, but there is no clear leader with a compelling proposition.

Kyle, the founder of Multicoin Capital, consistently pushes roughly the same message: Fluence needs clear use cases and a clear understanding of its customers. Kyle has always advised us not to spread ourselves thin or build a platform for everything, but to focus on specific segments and understand our market.

We position ourselves as a private testnet, not as production. We are not currently trying to onboard as many customers as possible or push them to launch real products on Fluence. We have many partnerships and experiments, but we are not pushing this.

In the future we want to reach all cloud users who rely on services like AWS Lambda. In other words, Fluence's use cases resemble those of cloud functions: data pipelines, data processing, all kinds of bots, and so on.

Fluence is useful to web3 as an oracle layer: it can index chains and run bots. But some features are still missing there, such as support for popular programming languages like Python or JavaScript for writing functions to deploy on Fluence.

We compile everything to WebAssembly, so Rust and C++ are supported today, but people want Python and JavaScript. We are working to make sure Python support happens. It will greatly expand what is possible and lower the barrier to entry for developers. Right now many people do not use Fluence simply because they do not know Rust.

Users can also choose providers on Fluence. There are algorithms that hide this magic under the hood. We do not yet have filters for choosing providers by geolocation or hardware, because those are non-trivial problems. How do you verify that a provider's servers really are in Europe if that is what it claims? Or that it really has powerful hardware?

We want to build Proof-of-Capacity, which is about rewarding the allocation of specific compute resources to the network. The technology lets you prove that you have a certain amount of resources, measured in CPU and memory. That is the kind of technology we would like to arrive at in the future.

On providers and downsides

Technically you can become a provider with a laptop. But it is unlikely anyone will value that. Laptops are slow and can shut down unexpectedly. Still, the protocol itself imposes no restrictions, and if someone has a whole network of interconnected laptops ready to run tasks cheaply, then fine.

It is only a question of demand. We focus on cases where there is, say, a service backend that has to run fast, and not every laptop is suitable for that. But if we are searching for aliens, then why not.

The main barrier is the developer experience. Take Vercel, which front-end and JavaScript developers use to deploy JavaScript applications. It added functions that run on AWS Lambda under the hood. That is how deeply AWS is integrated into many services. People use Amazon's infrastructure not even directly, but through many different services.

Heroku, for example, lets you deploy anything, also onto Amazon. Fluence and other decentralized technologies do not yet have integrations at that level. Even the tooling that exists around Filecoin is very basic.

People are used to working not even with the Amazon API but through a layer that simplifies development. That kind of experience does not exist in web3 infrastructure. But it will come. It takes time to finish the basic tools and start building integrations with the products that simplify the developer experience.

The problem of providers who resell AWS nodes as Fluence nodes is easily solved by pure economics. When a provider with its own data centers joins the network, it quickly undercuts these resellers, because its costs are much lower than theirs.

For example, Amazon has several types of pricing: default prices, cheaper ones, and spot prices for resources that are available only for a limited time. These temporary resources are much cheaper. The cheapest hardware you can get on Amazon is at spot prices. If you devise an algorithm for buying hardware quickly and then migrating to other hardware, you can have the cheapest infrastructure Amazon offers. But even at those prices the hardware will cost more than running your own data centers.

There is nothing wrong with people running Fluence nodes on hardware bought from Amazon. You can still deploy code, and the compute resources are still there. But it does not solve our decentralization problem. It is simply that the greater the demand, the more professional providers join. And once we reach the point where we have data centers, reselling Amazon becomes unprofitable.

On the future of decentralized computing

All of this is in fact developing gradually. In recent years there have been many cases of censorship of one kind or another: GitHub banned accounts or projects, Twitter started doing incomprehensible things. Whenever a large corporation censors its users, or a government forces a corporation to censor users, a wave of effort to protect that infrastructure follows.

In general, businesses have long wanted to reduce platform risk. The question is whether a solution exists. Say I want to lower my risk today and move off Amazon. Where to? Well, I can set up servers in data centers. And then what? Go back to where everyone was 20 years ago, before the cloud was invented? I want the features and the ease with which I manage my infrastructure in the cloud, but with less exposure to censorship.

Our focus now is on finishing everything as quickly as possible. We want to activate greater demand for Fluence and for decentralized infrastructure as a whole. That is the first thing. The second is that blockchains make efficient economies possible, and they are working now. Since we know that Amazon and other clouds have large margins on cloud services, we are confident that the cost of computing on Fluence will be lower than on other clouds, and the experience better.

Price is a significant factor for businesses that use clouds. A modern business that relies on them spends a lot of money there and will be only too glad to optimize those costs.

We believe we can compete in two areas: platform risk and low prices. I cannot say yet how much lower, but we hope at least around 10 times.

On the community and the Fluence token

Right now we target developers as our community. But community is a broad concept with different roles in it. When we head to mainnet, we will have compute providers and token holders, people who will take part in the DAO and governance.

We currently partner with developer communities. We also run events and sponsor hackathons. Recently we partnered with Developer DAO at the Denver conference. There are also partnerships in India that have not launched yet. We try to keep Fluence on people's radar even if the project is in some respects not production-ready. We need people to try it and give feedback.

We run bounty programs at hackathons. Right now there is no list of active bounties outside hackathons, but we want to get there. In web3 it is completely normal for projects to have bounties and use them to attract developers.

For us, mainnet means launching the compute marketplace and on-chain payments. We will definitely launch on the EVM, but we are still deciding on which chain. And there will definitely be a token. We are targeting mainnet this year and very much hope to make it. As always, these are plans, though we are serious about delivering on them.
